Is cloud, colocation or hybrid computing most cost-effective?

The advent of cloud computing has brought businesses numerous benefits, offering large economies of scale and enabling rapid growth for digital enterprises. For artificial intelligence (AI) startups, the hyperscale cloud can seem highly attractive, offering raw CPU compute, countless processor and memory configurations, near-limitless storage and global connectivity, all accessible with a credit card.

In many respects, companies such as Microsoft, Amazon Web Services (AWS), Google and Tencent have made it very easy for businesses to get started and much simpler to scale, both of which are essential for AI startups.

As a result, many startups have been quick to deploy workloads in the cloud, where IT infrastructure loaded with GPU-accelerated computing and heavyweight CPUs is available far more quickly than by purchasing, installing, configuring, and deploying equipment on-premises.

Indeed, many have seized the opportunities presented by AI as a Service (AIaaS) models, which offer deployments on tap. Yet as machine learning models become more complex and their datasets grow, the computational requirements can spiral. For an organisation using one of the cloud providers as its compute platform, that growth translates directly into ever-higher costs.

Although no AI entrepreneur sets up a business aiming to become a cloud provider’s personal bank account, startups have traditionally been reluctant to invest in owned, on-premises HPC. No young business wants the cost of high-performance IT ownership on its balance sheet, but many don’t realise how quickly costs can escalate with cloud provisioning or, depending on the contract, how difficult it can be to switch providers and cloud infrastructure. It can happen to the best of us: we’ve all heard about the NASA scientists who failed to factor in the data retrieval costs for their cloud contract, so it pays to be diligent.

Inflection Point

Away from the flashing lights of the cloud is another alternative: colocation. What is less well known is that it can be far more economical for AI startups to move their workloads from the cloud to a colocation provider, especially once utilisation reaches 50%.

Deploying AI hardware within a colo also brings benefits that the cloud cannot match, including dedicated, owned infrastructure, greater workload speed and performance, and significantly lower cost. But just how much can an AI startup save?

Contrary to popular belief, cloud providers are not at the cutting edge of every technology. Given the rapid technological change that underpins the AI sector, partnering with a specialist colocation data centre to host the latest infrastructure can justify the investment over the technology commonly available on the public clouds, especially when it offers 10x the performance at potentially more than 70% lower cost.

The inflection point at which industrial-scale colocation offers greater value than the cloud will, of course, differ for each startup. However, there are some general principles to follow.

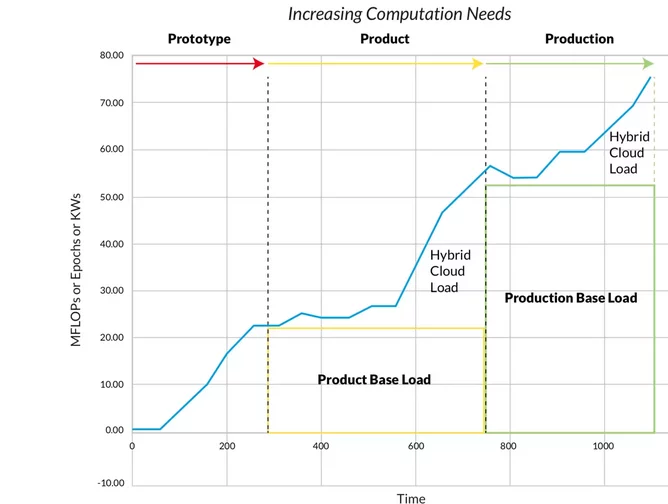

AI startups and established data-heavy organisations pay for the capacity they use, which at the start of each project will be fairly small. However, as they move through the design, prototype, training, and production phases, that capacity will need to grow. Anticipating and managing this growth is challenging enough without also navigating the myriad pitfalls of the cloud, including spiralling costs, data egress charges and cloud lock-in. Understanding a project’s data use cycle is therefore essential for small and large AI-led users alike.

An AI startup looking for genuine HPC performance will get higher speed and performance from a cluster of dedicated, interconnected servers, nodes and GPUs in a colocation facility than from a 100,000-server data centre or a multi-region cloud. It will also avoid sharing resources with noisy neighbours in the cloud, and once an AI workload is in full production, predictable performance is crucial.

Hyperscale clouds excel at providing flexibility when you’re not sure how much compute you will use. The clouds’ pay-as-you-go models and tariffs mean you pay only for what you use (albeit at a premium). However, once your compute reaches a reliable utilisation of 50% or more, flexibility is no longer the main driver; the priority becomes keeping costs down as the compute platform scales.
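The trade-off can be written as a simple break-even condition. This is a sketch under two assumptions: cloud capacity is billed only for the hours actually used, and a colocated server costs a fixed amount over the period whatever its utilisation. The symbols are introduced here for illustration: $u$ is utilisation (0 to 1), $r$ the on-demand hourly rate, $T$ the hours in the period and $C_{\text{colo}}$ the fixed colocation cost over $T$.

```latex
C_{\text{cloud}}(u) = u\,r\,T, \qquad
C_{\text{cloud}}(u^{*}) = C_{\text{colo}}
\;\Longrightarrow\;
u^{*} = \frac{C_{\text{colo}}}{r\,T}
```

Below $u^{*}$, pay-as-you-go is cheaper despite its premium; above it, every extra hour of use widens the gap in colocation’s favour. Plugging in the DGX-1 and p3dn.24xlarge figures quoted later in this article (compute only, excluding storage: $C_{\text{colo}} \approx \$286{,}372$ and $rT = 2 \times \$273{,}470 = \$546{,}940$ over two years) gives $u^{*} \approx 0.52$.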

As a rule of thumb, therefore, once you reach 50% utilisation it becomes far more economical to run the baseload on physical servers hosted in a high-performance colo and absorb production peaks by bursting into the public cloud. See the graph below.
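The baseload-plus-burst idea can be sketched as a small cost model. This is a minimal illustration, not the model behind this article’s figures: it assumes each owned node costs a flat monthly amount (derived from the DGX-1 colocation figures quoted later), cloud capacity is billed per node-hour at the p3dn.24xlarge on-demand rate, and the hourly demand profile is entirely hypothetical. The function names `monthly_cost` and `best_baseload` are illustrative.

```python
# Hypothetical inputs, derived from the DGX-1 / p3dn.24xlarge figures in this article.
HOURS_PER_MONTH = 730
COLO_NODE_PER_MONTH = 238_372 / 24 + 2_000  # depreciation + power and rent, USD
CLOUD_NODE_PER_HOUR = 273_470 / 8_760       # on-demand annual price / hours per year


def monthly_cost(demand, baseload_nodes):
    """Cost of owning `baseload_nodes` in colo and bursting the rest to the cloud.

    `demand` lists the number of nodes needed in each hour of the month.
    """
    fixed = baseload_nodes * COLO_NODE_PER_MONTH
    # Only demand above the owned baseload is rented from the cloud.
    burst_node_hours = sum(max(0, d - baseload_nodes) for d in demand)
    return fixed + burst_node_hours * CLOUD_NODE_PER_HOUR


def best_baseload(demand):
    """Try every baseload size from 0 (all cloud) up to peak demand."""
    return min(range(max(demand) + 1), key=lambda b: monthly_cost(demand, b))


# Hypothetical month: 2 nodes busy around the clock, 4 nodes for a 100-hour peak.
demand = [2] * (HOURS_PER_MONTH - 100) + [4] * 100
b = best_baseload(demand)
print(f"all cloud: ${monthly_cost(demand, 0):,.0f}")
print(f"best hybrid ({b} colo nodes): ${monthly_cost(demand, b):,.0f}")
```

For this hypothetical month, owning two nodes and bursting the 100-hour peak to the cloud costs roughly $30,108, against $51,822 for all-cloud. A third colocated node would be busy only about 14% of the time, well below the 50% threshold, so the search leaves that peak in the cloud.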

Diagram 1

Put Your Money Where Your Mouth Is!

For an AI startup, managing costs is crucial, so we recently worked with Timothy Prickett Morgan at The Next Platform to model and test the costs of using the cloud versus dedicated, high-performance colocation.

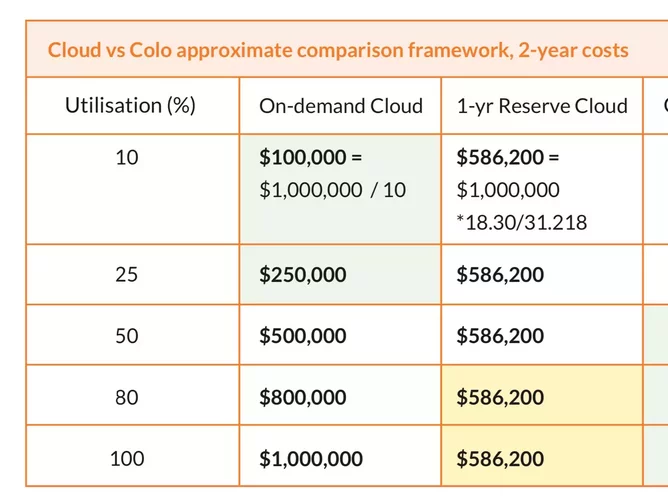

Taking an NVIDIA DGX-1 and an eight-GPU instance on AWS as our example, running a DGX-1 and its storage in a colocation facility costs around $238,372 on a two-year straight-line depreciation basis, or roughly $10,000 per month. Add a further $2,000 per month for 10kW of power and rent.
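The colocation figures above can be checked with a few lines of Python. This is a minimal sketch assuming the $2,000 power-and-rent charge is flat every month and that straight-line depreciation is the only hardware cost; it ignores staff, support contracts and any residual value of the hardware.

```python
# Figures quoted above for a DGX-1 in colocation.
HARDWARE_AND_STORAGE = 238_372    # capital cost, USD
DEPRECIATION_MONTHS = 24          # two-year straight-line depreciation
POWER_AND_RENT_PER_MONTH = 2_000  # 10 kW of power plus rent, USD


def colo_monthly_cost():
    # Straight line: the capital cost is spread evenly over every month.
    depreciation = HARDWARE_AND_STORAGE / DEPRECIATION_MONTHS
    return depreciation + POWER_AND_RENT_PER_MONTH


def colo_total_cost(months=DEPRECIATION_MONTHS):
    return colo_monthly_cost() * months


print(f"monthly: ${colo_monthly_cost():,.0f}")
print(f"two-year total: ${colo_total_cost():,.0f}")
```

On these assumptions the DGX-1 costs about $11,932 a month all-in (roughly $9,932 of depreciation plus $2,000), or $286,372 over the two-year depreciation period.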

On AWS, the DGX-1-equivalent instance, p3dn.24xlarge, costs $273,470 per year on demand and $160,308 per year on a one-year reserved instance contract. Add AWS storage services and an AI startup can expect to pay around $1,000,000 for this capacity over two years: a pretty hefty price tag!

Diagram 2

To be fair, utilisation rates matter when comparing colocation and cloud provisioning, so the table below shows two-year costs as utilisation scales from 10% to 100%. At low utilisation the cloud appears far more reasonably priced, but its cost escalates quickly as utilisation grows.
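A simplified version of this utilisation sweep can be reproduced from the compute figures quoted in this article. This is a sketch, not the model behind the table: it excludes storage (the article does not break out the AWS storage cost), assumes on-demand instances are billed only for hours used, and assumes two consecutive one-year reserved terms, which are billed in full regardless of use.

```python
# Two-year costs for one DGX-1-class node, using the figures quoted in this article.
# Storage is excluded because the AWS storage cost is not broken out.
HOURS_2Y = 2 * 8_760
COLO_2Y = 238_372 + 24 * 2_000        # depreciation + power and rent; fixed
ON_DEMAND_PER_HOUR = 273_470 / 8_760  # p3dn.24xlarge on-demand
RESERVED_2Y = 2 * 160_308             # assumed: one-year reserved term, bought twice


def on_demand_2y(utilisation):
    """On-demand spend, assuming billing only for the hours actually used."""
    return utilisation * HOURS_2Y * ON_DEMAND_PER_HOUR


def break_even_utilisation():
    """Utilisation at which on-demand spend equals the fixed colo cost."""
    return COLO_2Y / (HOURS_2Y * ON_DEMAND_PER_HOUR)


print(f"{'util':>5} {'on-demand':>12} {'reserved':>12} {'colo':>12}")
for pct in range(10, 101, 10):
    u = pct / 100
    print(f"{pct:>4}% {on_demand_2y(u):>12,.0f} {RESERVED_2Y:>12,.0f} {COLO_2Y:>12,.0f}")
print(f"break-even utilisation: {break_even_utilisation():.0%}")
```

On these compute-only numbers, on-demand spend overtakes the colocated DGX-1 at roughly 52% utilisation, close to the 50% rule of thumb, while a reserved instance costs $320,616 over two years however little it is used. Real cloud bills also include storage and egress, which is why the two-year AWS estimate of around $1,000,000 is so much higher.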

Diagram 3

Many AI startups focus on eliminating capital expenditure (CapEx) in the short term, or jump to a decision based on a cloud provider’s sales pitch, rather than planning for longer-term cash flow, performance, and profitability. What is clear, however, is that before renting any GPU instance in the cloud, an AI startup must consider how its computational needs will evolve and how costs will change at every stage of its lifecycle.

Ultimately, AI startups need flexibility, agility, speed and performance, which means being clever with both your budget AND your compute.

By taking a more considered, hybrid approach, using colocation for performance and cost and the cloud for scale and bursting, AI startups can avoid cloud lock-in and keep control of costs, potentially saving up to 70% by deploying on-premises or in a high-performance colocation facility rather than in the cloud. Moreover, for small and large organisations alike, understanding best practices for data-heavy AI and machine learning workloads makes it possible to disaggregate your data, running intensive workloads in your colo and less data-heavy compute in the cloud.