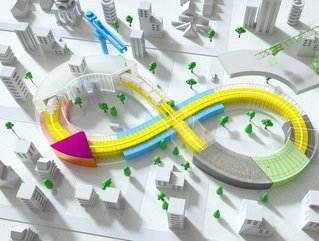

To infinity and beyond for AI agents taking the long view

Researchers from a group of organisations including MIT and the MIT-IBM Watson AI Lab have developed a machine-learning framework that enables cooperative or competitive AI agents to consider what other agents will do over a long time horizon, not just over the next few steps.

The agents can then adapt their behaviour to influence other agents’ future behaviour and arrive at an optimal, long-term solution. The researchers say the framework could be used by a group of autonomous drones working together to find a lost hiker in a thick forest, or by self-driving cars that keep passengers safe by anticipating the future moves of other vehicles on a busy highway.

“When AI agents are cooperating or competing, what matters most is when their behaviours converge at some point in the future,” says Dong-Ki Kim, a graduate student in the MIT Laboratory for Information and Decision Systems (LIDS) and lead author of a paper describing this framework. “There are a lot of transient behaviours along the way that don’t matter very much in the long run. Reaching this converged behaviour is what we really care about, and we now have a mathematical way to enable that.”
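Kim’s point that transient behaviours barely matter in the long run can be checked with a little arithmetic. The sketch below is illustrative only and uses made-up reward numbers, not data from the paper: two reward streams differ sharply for their first 50 steps, then settle on the same per-step reward, and their long-run averages draw together as the horizon grows.

```python
def average_reward(rewards):
    """Empirical average reward per step over a finite trajectory."""
    return sum(rewards) / len(rewards)


def trajectory(transient, converged, horizon):
    """Early `transient` rewards followed by a constant `converged` reward."""
    return transient + [converged] * (horizon - len(transient))


# Hypothetical numbers: very different early behaviour, same converged reward (1.0).
early_bad = [-10.0] * 50
early_good = [5.0] * 50

for horizon in (100, 10_000, 1_000_000):
    bad = average_reward(trajectory(early_bad, 1.0, horizon))
    good = average_reward(trajectory(early_good, 1.0, horizon))
    print(f"T={horizon:>9}: {bad:.5f} vs {good:.5f} (gap {good - bad:.5f})")
```

The gap between the two averages is 750/T (the difference between the sums of their first 50 rewards, divided by the horizon), so it falls from 7.5 at T = 100 to 0.00075 at T = 1,000,000. Only the converged behaviour survives in the limit.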

The senior author is Jonathan P. How, the Richard C. Maclaurin Professor of Aeronautics and Astronautics and a member of the MIT-IBM Watson AI Lab. Co-authors include researchers at the MIT-IBM Watson AI Lab, IBM Research, Mila-Quebec Artificial Intelligence Institute, and Oxford University. The research will be presented at the Conference on Neural Information Processing Systems.

Multiagent reinforcement learning by trial and error

The researchers focused on a problem known as multiagent reinforcement learning. Reinforcement learning is a form of machine learning in which an AI agent learns by trial and error. Researchers reward the agent for “good” behaviours that help it achieve a goal, and the agent adapts its behaviour to maximise that reward until it eventually becomes an expert at the task.
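A minimal single-agent illustration of trial-and-error learning, assuming the simplest possible setting: a multi-armed bandit with three actions whose hypothetical average rewards are 0.2, 0.5 and 1.0. This is a textbook epsilon-greedy learner, not the researchers’ method. It usually picks the action it currently rates best, occasionally tries a random one, and refines its estimates from the rewards it observes.

```python
import random


def epsilon_greedy_bandit(true_means, steps=5_000, epsilon=0.1, seed=0):
    """Learn action values by trial and error on a multi-armed bandit."""
    rng = random.Random(seed)
    n = len(true_means)
    estimates = [0.0] * n  # the agent's current guess of each action's reward
    counts = [0] * n       # how many times each action has been tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)                           # explore
        else:
            action = max(range(n), key=lambda a: estimates[a])  # exploit
        reward = rng.gauss(true_means[action], 1.0)  # noisy reward from the environment
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 1.0])
best = max(range(len(counts)), key=lambda a: counts[a])
print("estimates:", [round(e, 2) for e in estimates])
print("most-chosen action:", best)
```

With these settings the agent ends up choosing the highest-reward action (index 2) most often. The `epsilon` parameter sets the trade-off between exploring untried actions and exploiting the best one found so far.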

But when many cooperative or competing agents learn simultaneously, things become increasingly complex. As agents consider more future steps of their fellow agents, and how their own behaviour influences others, the problem soon requires far too much computational power to solve efficiently. This is why other approaches focus only on the short term.
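A back-of-the-envelope count shows how quickly looking ahead becomes expensive. The sketch assumes a hypothetical 4 actions per agent and counts distinct joint-action sequences, a simpler proxy for the harder problem of anticipating how other agents’ policies will change as they learn. Each extra agent multiplies the number of joint actions per step, and each extra step of lookahead multiplies the count again.

```python
def joint_sequences(num_agents: int, actions_per_agent: int, lookahead: int) -> int:
    """Number of distinct joint-action sequences over `lookahead` steps.

    Each step offers actions_per_agent ** num_agents joint actions,
    and each additional step multiplies the total by that amount.
    """
    joint_actions = actions_per_agent ** num_agents
    return joint_actions ** lookahead


for agents in (2, 5, 25):
    for steps in (1, 3, 10):
        count = joint_sequences(agents, 4, steps)
        print(f"{agents:>2} agents, {steps:>2} steps: {count:.3e}")
```

Even two agents looking ten steps ahead already face 4**20 (about 1.1 trillion) sequences, and 25 agents (the team size used in the researchers’ battle scenario) reach about 1.1e15 joint actions in a single step.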

“The AIs really want to think about the end of the game, but they don’t know when the game will end,” says Kim. “They need to think about how to keep adapting their behaviour into infinity so they can win at some far time in the future. Our paper essentially proposes a new objective that enables an AI to think about infinity.”

It is impossible to plug infinity into an algorithm, so the researchers designed their system so that agents focus on a future point at which their behaviour converges with that of the other agents, reaching what the researchers call an “active equilibrium.”
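The toy simulation below illustrates the idea that an agent can shape where other learning agents converge. It is not FURTHER and does not implement the paper’s definition of an active equilibrium. It assumes a textbook “battle of the sexes” game in which both agents want to coordinate but disagree on where. Agent B always learns by fictitious play (best-responding to A’s observed action frequencies). Agent A either reacts the same way to B or commits to its preferred action and accepts short-term losses while B adapts.

```python
# Battle-of-the-sexes payoffs: (action_a, action_b) -> (reward_a, reward_b).
# Both agents gain only by coordinating, but A prefers (0, 0) and B prefers (1, 1).
PAYOFF = {(0, 0): (2, 1), (1, 1): (1, 2), (0, 1): (0, 0), (1, 0): (0, 0)}


def best_response(opponent_counts, player):
    """Action maximising expected payoff against the opponent's observed frequencies."""
    total = sum(opponent_counts)

    def expected(action):
        value = 0.0
        for opp_action, count in enumerate(opponent_counts):
            joint = (action, opp_action) if player == 0 else (opp_action, action)
            value += count / total * PAYOFF[joint][player]
        return value

    return max((0, 1), key=expected)  # ties go to action 0


def play(a_commits, steps=10_000):
    """Return A's average reward per step; B always learns by fictitious play.

    If a_commits is True, A plays action 0 every step to steer B's learning.
    Otherwise A also uses fictitious play, reacting only to B's past actions.
    """
    counts_of_a = [0, 1]  # B's prior: A has played action 1 once
    counts_of_b = [0, 1]  # A's prior: B has played action 1 once
    total_a = 0
    for _ in range(steps):
        a = 0 if a_commits else best_response(counts_of_b, player=0)
        b = best_response(counts_of_a, player=1)
        total_a += PAYOFF[(a, b)][0]
        counts_of_a[a] += 1
        counts_of_b[b] += 1
    return total_a / steps


print("reactive A, average reward:", play(a_commits=False))
print("committed A, average reward:", play(a_commits=True))
```

The reactive A settles with B on (1, 1) and averages exactly 1.0 per step. The committed A loses its first two rounds while B adjusts; after that both play (0, 0), and A’s long-run average approaches 2.0. Looking past the transient is what lets A steer the outcome.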

The machine-learning framework they developed, known as FURTHER (which stands for FUlly Reinforcing acTive influence witH averagE Reward), enables agents to learn how to adapt their behaviours as they interact with other agents to achieve this active equilibrium.
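The “average reward” in FURTHER’s name refers to a standard way of scoring behaviour over an unbounded horizon. In its textbook single-agent form, shown here as a sketch (the paper’s objective also has to account for how the other agents learn, and may differ in its details), the value of a policy $\pi$ is its long-run reward per step, assuming the limit exists:

$$
\rho(\pi) = \lim_{T \to \infty} \frac{1}{T}\, \mathbb{E}_{\pi}\!\left[\sum_{t=0}^{T-1} r_t\right]
$$

If rewards are bounded, $|r_t| \le R_{\max}$, then any finite stretch of $K$ transient steps contributes at most

$$
\left| \frac{1}{T} \sum_{t=0}^{K-1} r_t \right| \le \frac{K\, R_{\max}}{T} \xrightarrow[T \to \infty]{} 0,
$$

so the objective is determined by the behaviour the agents eventually converge to rather than by the path they take to reach it.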

“The challenge was thinking about infinity,” says Kim. “We had to use a lot of different mathematical tools to enable that, and make some assumptions to get it to work in practice.”

The researchers tested their approach against other multiagent reinforcement learning frameworks in several scenarios, including a pair of robots fighting sumo-style and a battle pitting two 25-agent teams against one another. In both cases, the AI agents using FURTHER won more often than those using the other frameworks.

While games were used in the testing phase, the researchers say FURTHER could be applied to any kind of multiagent problem, for example helping economists develop sound policy in situations where many interacting entities have behaviours and interests that change over time.